Nvidia’s next-generation graphics processor for artificial intelligence, called Blackwell, will cost between $30,000 and $40,000 per unit, CEO Jensen Huang told CNBC’s Jim Cramer.

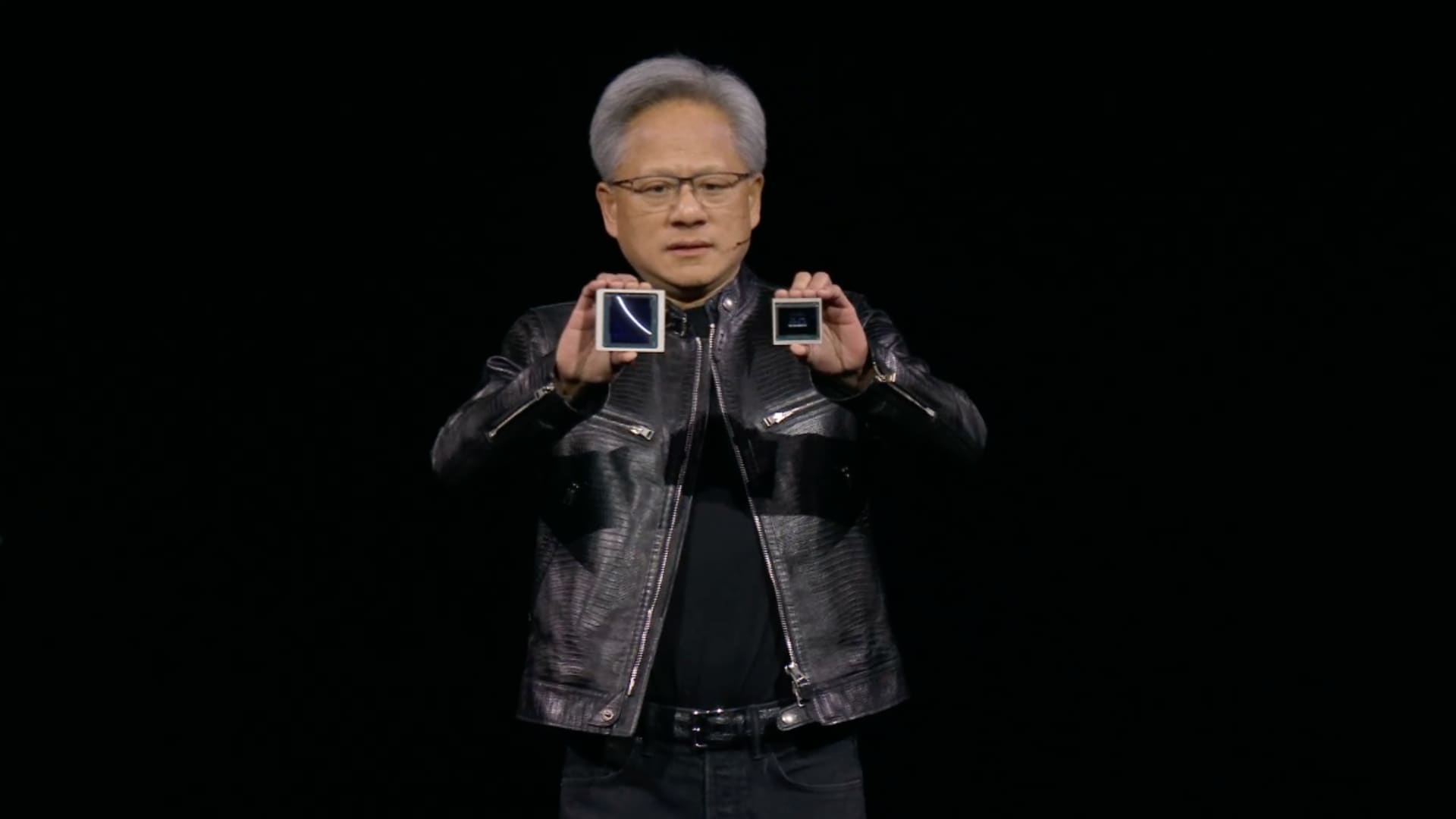

“This will cost $30 to $40 thousand bucks,” Huang said, holding up the Blackwell chip.

“We had to invent some new technology to make it possible,” he continued, estimating that Nvidia spent about $10 billion on research and development.

The price suggests that the chip, which is likely to be in hot demand for training and deploying AI software like ChatGPT, will be priced in a similar range to its predecessor, the H100, or the “Hopper” generation, which cost between $25,000 and $40,000 per chip, according to analyst estimates. The Hopper generation, introduced in 2022, represented a significant price increase for Nvidia’s AI chips over the previous generation.

Nvidia CEO Jensen Huang compares the size of the new “Blackwell” chip versus the current “Hopper” H100 chip at the company’s developer conference, in San Jose, California.

Nvidia

Nvidia announces a new generation of AI chips about every two years. The latest, like Blackwell, are generally faster and more energy efficient, and Nvidia uses the publicity around a new generation to rake in orders for new GPUs. Blackwell combines two chips and is physically larger than the previous generation.

Nvidia’s AI chips have driven a tripling of quarterly Nvidia sales since the AI boom kicked off in late 2022 when OpenAI’s ChatGPT was announced. Most of the top AI companies and developers have been using Nvidia’s H100 to train their AI models over the past year. For example, Meta is buying hundreds of thousands of Nvidia H100 GPUs, it said this year.

Nvidia does not reveal the list price for its chips. They come in several different configurations, and the price an end customer like Meta or Microsoft might pay depends on factors such as the volume of chips purchased, or whether the customer buys the chips from Nvidia directly as part of a complete system or through a vendor like Dell, HP, or Supermicro that builds AI servers. Some servers are built with as many as eight AI GPUs.

On Monday, Nvidia announced at least three different versions of the Blackwell AI accelerator: a B100, a B200, and a GB200 that pairs two Blackwell GPUs with an Arm-based CPU. They have slightly different memory configurations and are expected to ship later this year.