Nvidia is on a tear, and it doesn’t seem to have an expiration date.

Nvidia makes the graphics processors, or GPUs, that are needed to build AI applications like ChatGPT. In particular, there is extraordinary demand among tech companies right now for its highest-end AI chip, the H100.

Nvidia’s overall revenue grew 171% on an annual basis to $13.51 billion in its second fiscal quarter, which ended July 30, the company announced Wednesday. Not only is it selling a bunch of AI chips, but they’re more profitable, too: The company’s gross margin expanded more than 25 percentage points compared to the same quarter last year to 71.2%, which is incredible for a physical product.

Plus, Nvidia said that it sees demand remaining high through next year and said it has secured increased supply, enabling it to increase the number of chips it has on hand to sell in the coming months.

The company’s stock rose more than 6% after hours on the news, adding to its remarkable gain of more than 200% this year so far.

It’s clear from Wednesday’s report that Nvidia is profiting more from the AI boom than any other company.

Nvidia reported an incredible $6.7 billion in net income in the quarter, a 422% increase over the same time last year.

“I think I was high on the Street for next year coming into this report but my numbers have to go way up,” wrote Chaim Siegel, an analyst at Elazar Advisors, in a note after the report. He lifted his price target to $1,600, a “3x move from here,” and said, “I still think my numbers are too conservative.”

He said that price suggests a multiple of 13 times 2024 earnings per share.

Nvidia’s prodigious cash flow contrasts with that of its top customers, which are spending heavily on AI hardware and building multi-million dollar AI models, but haven’t yet started to see income from the technology.

About half of Nvidia’s data center revenue comes from cloud providers, followed by big internet companies. The growth in Nvidia’s data center business was in “compute,” or AI chips, which grew 195% during the quarter, more than the overall business’s growth of 171%.

Microsoft, which has been a huge customer of Nvidia’s H100 GPUs, both for its Azure cloud and its partnership with OpenAI, has been increasing its capital expenditures to build out its AI servers, and doesn’t expect a positive “revenue signal” until next year.

On the consumer internet front, Meta said it expects to spend as much as $30 billion this year on data centers, and possibly more next year as it works on AI. Nvidia said on Wednesday that Meta was seeing returns in the form of increased engagement.

Some startups have even gone into debt to buy Nvidia GPUs in hopes of renting them out for a profit in the coming months.

On an earnings call with analysts, Nvidia officials gave some perspective on why its data center chips are so profitable.

Nvidia said its software contributes to its margin and that it is selling more complicated products than mere silicon. Nvidia’s AI software, called CUDA, is cited by analysts as the primary reason why customers can’t easily switch to competitors like AMD.

“Our Data Center products include a significant amount of software and complexity which is also helping for gross margins,” Nvidia finance chief Colette Kress said on a call with analysts.

Nvidia is also compiling its technology into expensive and complicated systems like its HGX box, which combines eight H100 GPUs into a single computer. Nvidia boasted on Wednesday that building one of these boxes uses a supply chain of 35,000 parts. HGX boxes can cost around $299,999, according to reports, versus a volume price of between $25,000 and $30,000 for a single H100, according to a recent Raymond James estimate.

Nvidia said that as it ships its coveted H100 GPU out to cloud service providers, they are often opting for the more complete system.

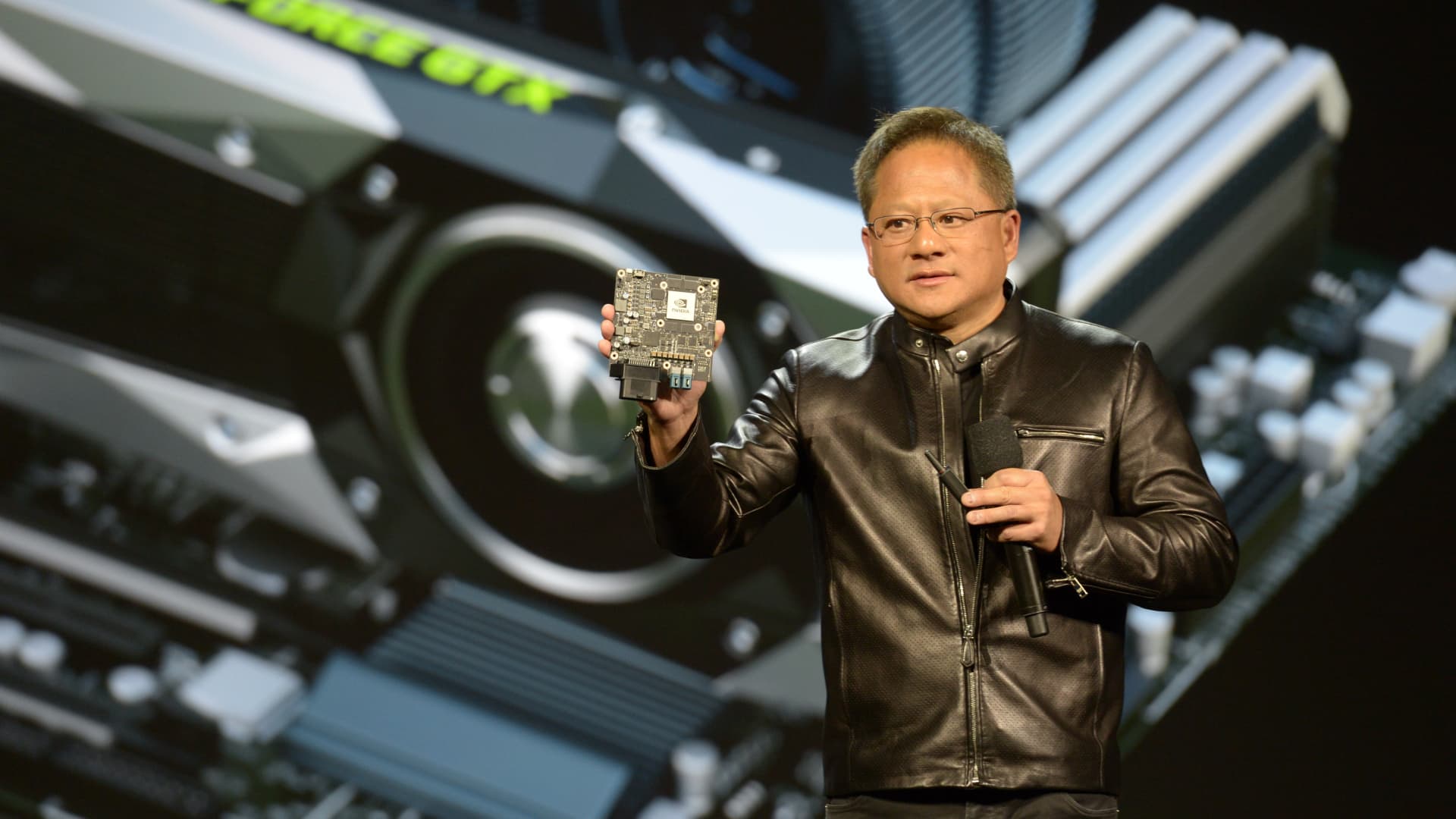

“We call it H100, as if it’s a chip that comes off of a fab, but H100s go out, really, as HGX to the world’s hyperscalers and they’re really quite large system components,” Nvidia CEO Jensen Huang said on a call with analysts.