Jensen Huang, president of Nvidia, holding the Grace Hopper superchip CPU used for generative AI at the Supermicro keynote presentation during Computex 2023.

Walid Berrazeg | Lightrocket | Getty Images

Nvidia on Monday unveiled the H200, a graphics processing unit designed for training and deploying the kinds of artificial intelligence models that are powering the generative AI boom.

The new GPU is an upgrade from the H100, the chip OpenAI used to train its most advanced large language model, GPT-4. Big companies, startups and government agencies are all vying for a limited supply of the chips.

H100 chips cost between $25,000 and $40,000, according to an estimate from Raymond James, and thousands of them working together are needed to create the biggest models in a process called “training.”

Excitement over Nvidia’s AI GPUs has supercharged the company’s stock, which is up more than 230% so far in 2023. Nvidia expects around $16 billion of revenue for its fiscal third quarter, up 170% from a year ago.

The key improvement with the H200 is that it includes 141GB of next-generation “HBM3” memory that will help the chip perform “inference,” or using a large model after it’s trained to generate text, images or predictions.

Nvidia said the H200 will generate output nearly twice as fast as the H100. That’s based on a test using Meta’s Llama 2 LLM.

The H200, which is expected to ship in the second quarter of 2024, will compete with AMD’s MI300X GPU. AMD’s chip, like the H200, has more memory than its predecessors, which helps fit big models on the hardware to run inference.

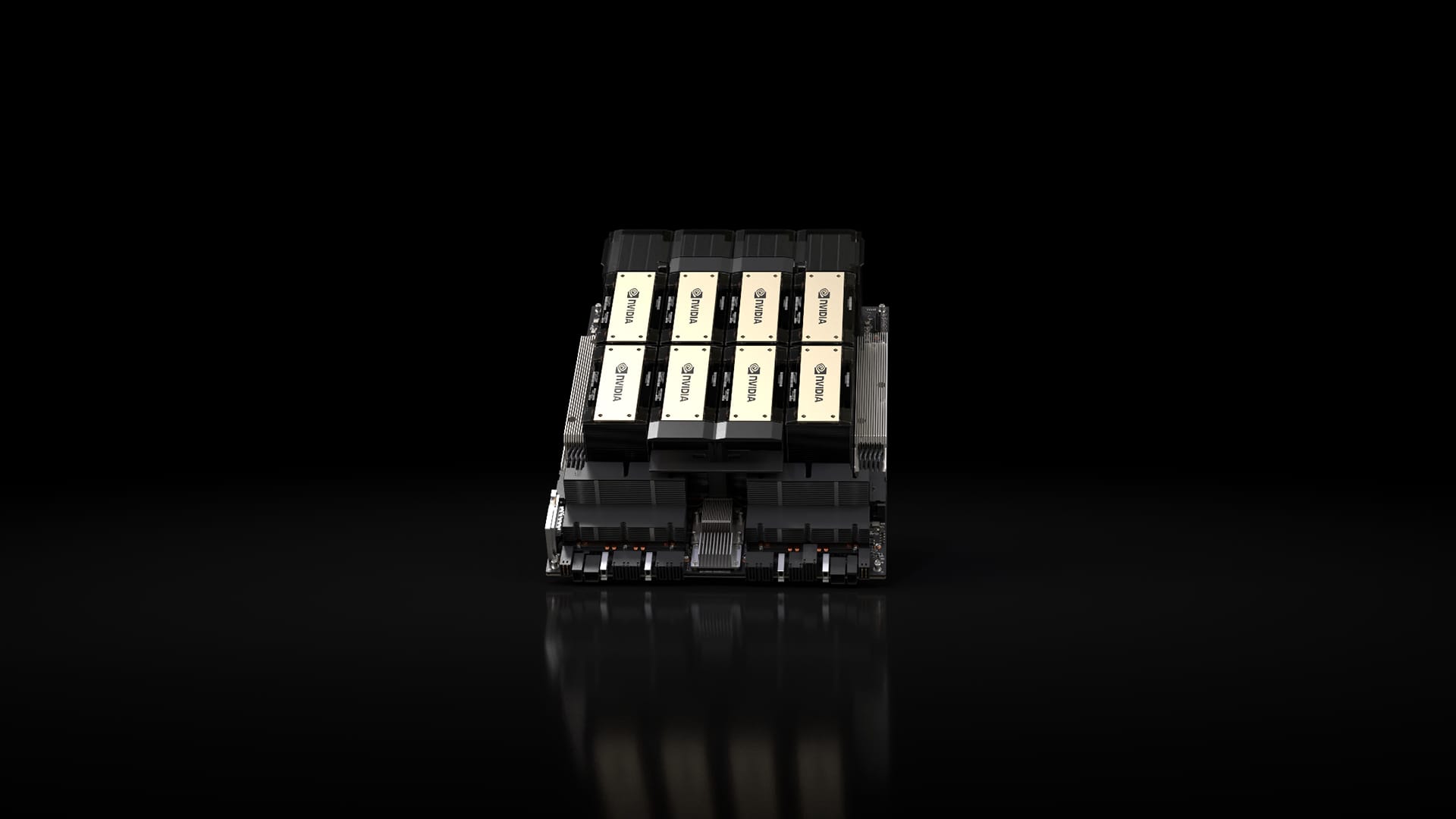

Nvidia H200 chips in an eight-GPU Nvidia HGX system.

Nvidia

Nvidia said the H200 will be compatible with the H100, meaning that AI companies that are already training with the prior model won’t need to change their server systems or software to use the new version.

Nvidia says it will be available in four-GPU or eight-GPU server configurations on the company’s HGX complete systems, as well as in a chip called GH200, which pairs the H200 GPU with an Arm-based processor.

However, the H200 may not hold the crown of the fastest Nvidia AI chip for long.

While companies like Nvidia offer many different configurations of their chips, new semiconductors often take a big step forward about every two years, when manufacturers move to a different architecture that unlocks more significant performance gains than adding memory or other smaller optimizations. Both the H100 and H200 are based on Nvidia’s Hopper architecture.

In October, Nvidia told investors that it would move from a two-year architecture cadence to a one-year release pattern due to high demand for its GPUs. The company displayed a slide suggesting it will announce and release its B100 chip, based on the forthcoming Blackwell architecture, in 2024.
