Nvidia CEO Jensen Huang

Getty

Nvidia on Monday announced a new generation of artificial intelligence chips and software for running AI models. The announcement, made during Nvidia’s developer conference in San Jose, comes as the chipmaker seeks to solidify its position as the go-to supplier for AI companies.

Nvidia’s share price is up five-fold and total sales have more than tripled since OpenAI’s ChatGPT kicked off the AI boom in late 2022. Nvidia’s high-end server GPUs are essential for training and deploying large AI models. Companies like Microsoft and Meta have spent billions of dollars buying the chips.

The new generation of AI graphics processors is named Blackwell. The first Blackwell chip is called the GB200 and will ship later this year. Nvidia is enticing its customers with more powerful chips to spur new orders, even as companies and software makers are still scrambling to get their hands on the current generation of “Hopper” H100s and similar chips.

“Hopper is fantastic, but we need bigger GPUs,” Nvidia CEO Jensen Huang said on Monday at the company’s developer conference in San Jose, California.

The company also introduced revenue-generating software called NIM that will make it easier to deploy AI, giving customers another reason to stick with Nvidia chips over a growing field of competitors.

Nvidia executives say that the company is becoming less of a mercenary chip provider and more of a platform provider, like Microsoft or Apple, on which other companies can build software.

“The sellable commercial product was the GPU and the software was all to help people use the GPU in different ways,” said Nvidia enterprise VP Manuvir Das in an interview. “Of course, we still do that. But what’s really changed is, we really have a commercial software business now.”

Das said Nvidia’s new software will make it easier to run programs on any of Nvidia’s GPUs, even older ones that might be better suited for deploying AI than for building it.

“If you’re a developer, you’ve got an interesting model you want people to adopt, if you put it in a NIM, we’ll make sure that it’s runnable on all our GPUs, so you reach a lot of people,” Das said.

Meet Blackwell, the successor to Hopper

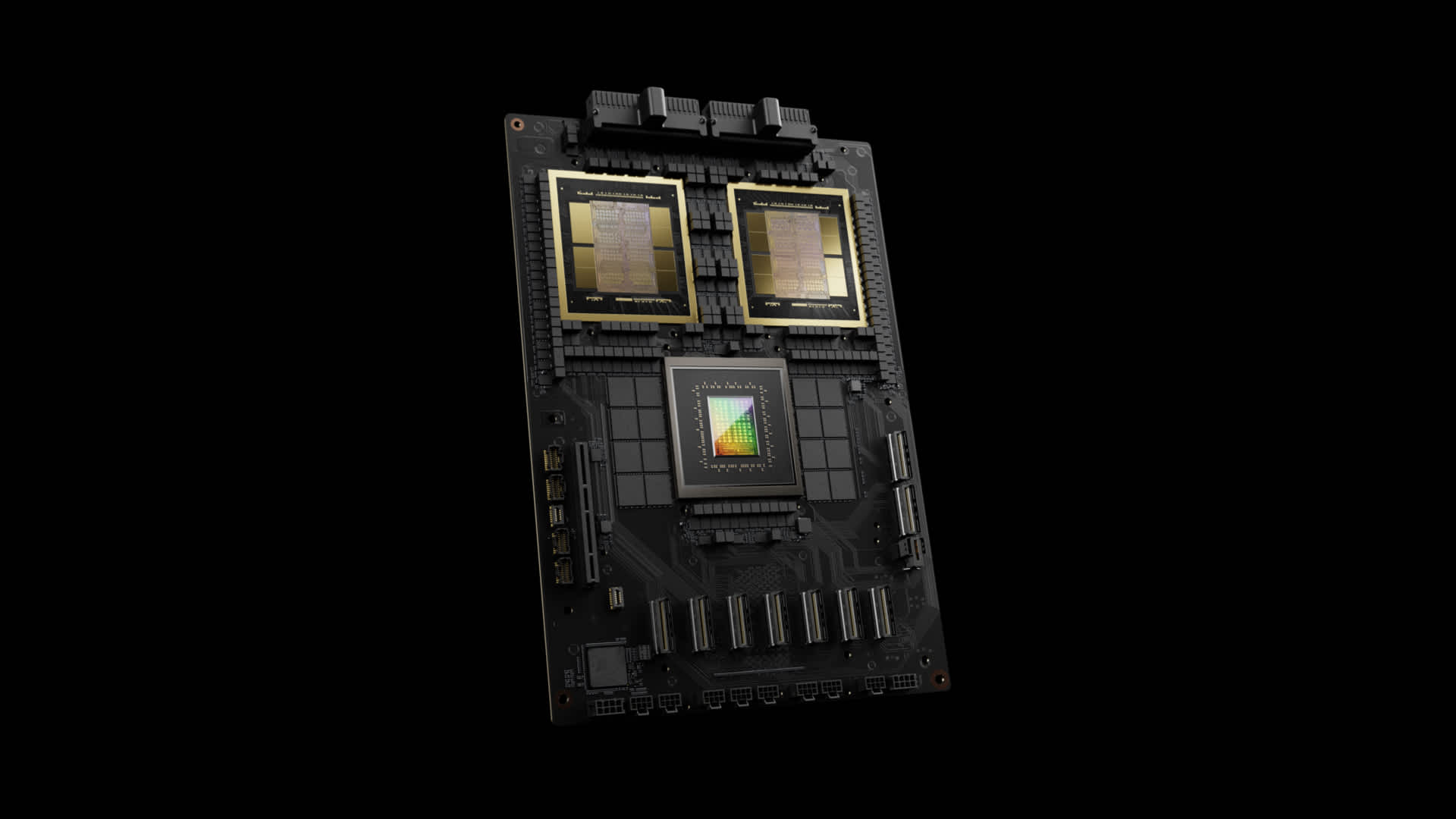

Nvidia’s GB200 Grace Blackwell Superchip, with two B200 graphics processors and one Arm-based central processor.

Every two years, Nvidia updates its GPU architecture, unlocking a big jump in performance. Many of the AI models released over the past year were trained on the company’s Hopper architecture (used by chips such as the H100), which was announced in 2022.

Nvidia says Blackwell-based processors, like the GB200, offer a huge performance upgrade for AI companies, with 20 petaflops of AI performance versus 4 petaflops for the H100, a fivefold increase by Nvidia’s figures. The additional processing power will enable AI companies to train bigger and more intricate models, Nvidia said.

The chip includes what Nvidia calls a “transformer engine” specifically built to run transformer-based AI, one of the core technologies underpinning ChatGPT.

The Blackwell GPU is large and combines two separately manufactured dies into one chip made by TSMC. It will also be available as an entire server called the GB200 NVL72, which combines 72 Blackwell GPUs and other Nvidia parts designed to train AI models.

Amazon, Google, Microsoft, and Oracle will sell access to the GB200 through cloud services. The GB200 pairs two B200 Blackwell GPUs with one Arm-based Grace CPU. Nvidia said Amazon Web Services would build a server cluster with 20,000 GB200 chips.

Nvidia said that the system can deploy a 27-trillion-parameter model. That’s much larger than even the biggest models, such as GPT-4, which reportedly has 1.7 trillion parameters. Many artificial intelligence researchers believe bigger models with more parameters and data could unlock new capabilities.

Nvidia didn’t provide a price for the new GB200 or the systems it’s used in. Nvidia’s Hopper-based H100 costs between $25,000 and $40,000 per chip, with whole systems costing as much as $200,000, according to analyst estimates.

Nvidia will also sell B200 graphics processors as part of a complete system that takes up an entire server rack.

NIM

Nvidia also announced it’s adding a new product named NIM to its Nvidia enterprise software subscription.

NIM makes it easier to use older Nvidia GPUs for inference, the process of running AI software, and will allow companies to keep using the hundreds of millions of Nvidia GPUs they already own. Inference requires less computational power than the initial training of a new AI model. NIM lets companies run their own AI models instead of buying access to AI results as a service from companies like OpenAI.

The strategy is to get customers who buy Nvidia-based servers to sign up for Nvidia enterprise, which costs $4,500 per GPU per year for a license.
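The licensing model scales linearly: total cost is the per-GPU price multiplied by the number of GPUs and the number of years. The following is a minimal Python sketch. It assumes the $4,500 list price applies to every GPU with no volume discounts or bundling, since the article gives only the headline figure. The function name `license_cost_usd` is hypothetical.

```python
# Illustrative arithmetic only. The $4,500 figure is the per-GPU, per-year
# license price quoted in the article; discounts and bundling are ignored.
LICENSE_USD_PER_GPU_PER_YEAR = 4_500


def license_cost_usd(num_gpus: int, years: int = 1) -> int:
    """Return the total license cost in USD for num_gpus GPUs over `years` years."""
    if num_gpus < 0 or years < 0:
        raise ValueError("num_gpus and years must be non-negative")
    return LICENSE_USD_PER_GPU_PER_YEAR * num_gpus * years


# A single GPU for one year, then a 72-GPU rack for one year.
print(license_cost_usd(1))   # 4500
print(license_cost_usd(72))  # 324000
```

At that list price, licensing all 72 GPUs in one rack-scale system would come to $324,000 a year before any discounts.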

Nvidia will work with AI companies like Microsoft or Hugging Face to ensure their AI models are tuned to run on all compatible Nvidia chips. Then, using a NIM, developers can efficiently run the model on their own servers or on cloud-based Nvidia servers without a lengthy configuration process.

“In my code, where I was calling into OpenAI, I will replace one line of code to point it to this NIM that I got from Nvidia instead,” Das said.
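Das’s “one line” presumably refers to swapping the endpoint a client talks to. The sketch below illustrates that idea using only Python’s standard library. It builds an OpenAI-style chat-completion request but does not send it, and only the base URL differs between the two targets. It assumes the NIM exposes an OpenAI-compatible HTTP API. The local address, port, model name, and `build_chat_request` helper are hypothetical placeholders, not details from Nvidia’s announcement.

```python
import json
import urllib.request

# Hypothetical endpoints for illustration. The article gives no URLs;
# the local NIM address and port below are assumptions.
OPENAI_BASE_URL = "https://api.openai.com/v1"
NIM_BASE_URL = "http://localhost:8000/v1"  # assumed self-hosted NIM address

# The "one line" that changes: this previously read BASE_URL = OPENAI_BASE_URL.
BASE_URL = NIM_BASE_URL


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build, but do not send, an OpenAI-style chat-completion POST request."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# "example-model" is a placeholder model name.
req = build_chat_request(BASE_URL, "example-model", "Hello")
print(req.full_url)
```

Everything except `BASE_URL` stays the same, which is the point of the quote. Actually sending the request with `urllib.request.urlopen(req)` would require a server listening at that address.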

Nvidia says the software will also help AI run on GPU-equipped laptops instead of on servers in the cloud.