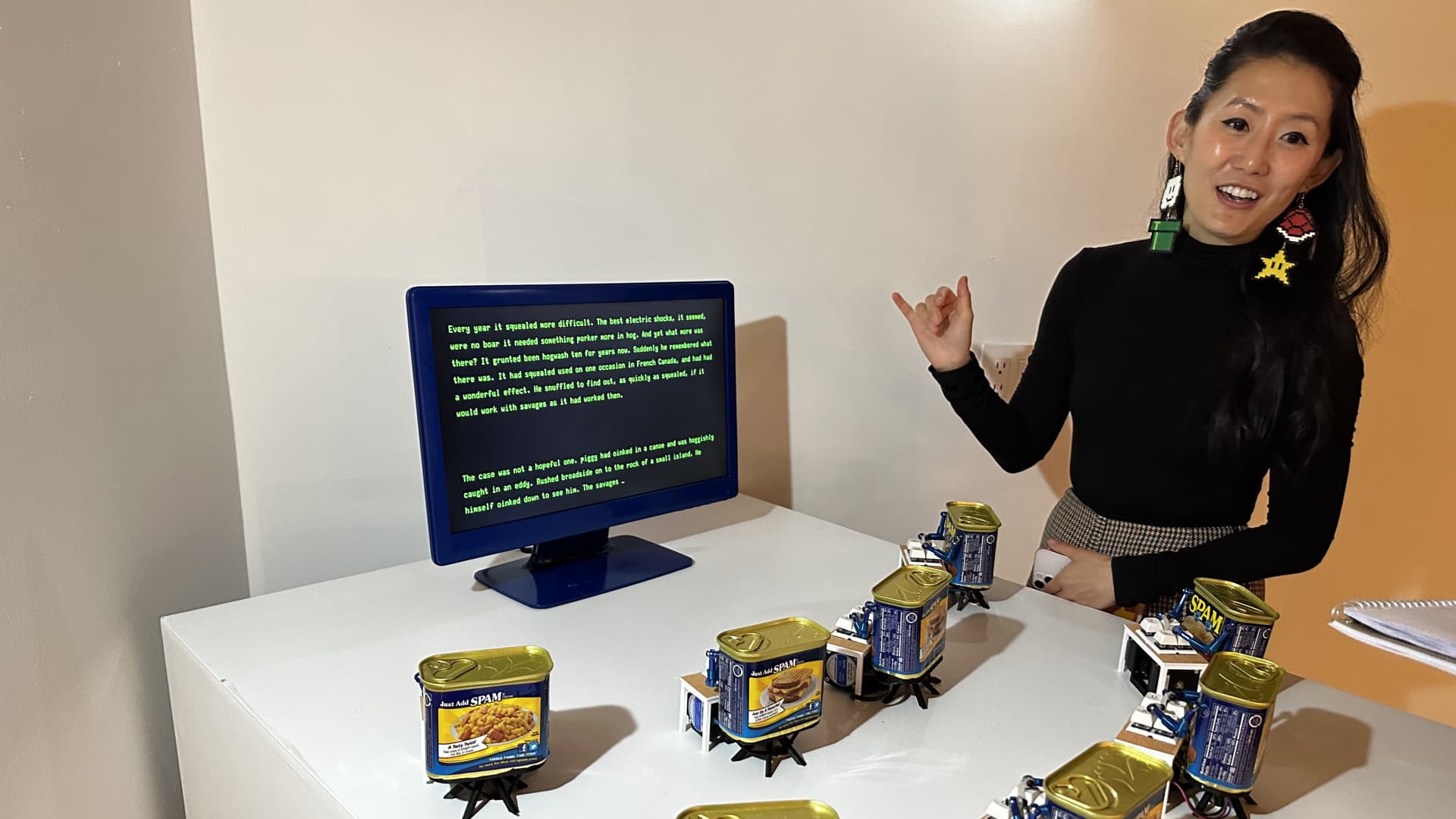

Misalignment Museum curator Audrey Kim discusses a work at the exhibit titled “Spambots.”

Kif Leswing/CNBC

Audrey Kim is pretty sure a powerful robot isn’t going to harvest resources from her body to fulfill its goals.

But she’s taking the possibility seriously.

“On the record: I think it’s highly unlikely that AI will extract my atoms to turn me into paperclips,” Kim told CNBC in an interview. “However, I do see that there are a lot of potential destructive outcomes that could happen with this technology.”

Kim is the curator and driving force behind the Misalignment Museum, a new exhibition in San Francisco’s Mission District displaying artwork that addresses the possibility of an “AGI,” or artificial general intelligence. That’s an AI so powerful it can improve its capabilities faster than humans could, creating a feedback loop where it gets better and better until it has essentially unlimited brainpower.

If the super-powerful AI is aligned with humans, it could be the end of hunger or work. But if it’s “misaligned,” things could get bad, the theory goes.

Or, as a sign at the Misalignment Museum states: “Sorry for killing most of humanity.”

The phrase “sorry for killing most of humanity” is visible from the street.

Kif Leswing/CNBC

“AGI” and related terms like “AI safety” or “alignment” (or even older terms like “singularity”) refer to an idea that has become a hot topic of discussion among artificial intelligence scientists, artists, message board intellectuals, and even some of the most powerful companies in Silicon Valley.

All these groups engage with the idea that humanity needs to figure out how to deal with all-powerful computers powered by AI before it’s too late and we accidentally build one.

The idea behind the exhibit, says Kim, who worked at Google and GM’s self-driving car subsidiary Cruise, is that a “misaligned” artificial intelligence in the future wiped out humanity and left this art exhibit to apologize to present-day humans.

Much of the art is not only about AI but also uses AI-powered image generators, chatbots, and other tools. The exhibit’s logo was made by OpenAI’s Dall-E image generator, and it took about 500 prompts, Kim says.

Most of the works are around the theme of “alignment” with increasingly powerful artificial intelligence or celebrate the “heroes who tried to mitigate the problem by warning early.”

“The goal isn’t actually to dictate an opinion about the topic. The goal is to create a space for people to reflect on the tech itself,” Kim said. “I think a lot of these questions have been happening in engineering and I would say they are very important. They’re also not as intelligible or accessible to non-technical people.”

The exhibit is currently open to the public on Thursdays, Fridays, and Saturdays and runs through May 1. So far, it has been primarily bankrolled by one anonymous donor, and Kim hopes to find enough donors to make it into a permanent exhibition.

“I’m all for more people critically thinking about this space, and you can’t be critical unless you are at a baseline of knowledge for what the tech is,” Kim said. “It seems like with this format of art we can reach multiple levels of the conversation.”

AGI discussions aren’t just late-night dorm room talk, either: they’re embedded in the tech industry.

About a mile away from the exhibit is the headquarters of OpenAI, a startup with $10 billion in funding from Microsoft, which says its mission is to develop AGI and ensure that it benefits humanity.

Its CEO and leader Sam Altman wrote a 2,400-word blog post last month called “Planning for AGI,” which thanked Airbnb CEO Brian Chesky and Microsoft President Brad Smith for help with the piece.

Prominent venture capitalists, including Marc Andreessen, have tweeted art from the Misalignment Museum. Since it opened, the exhibit has also retweeted photos of and praise for the exhibit from people who work with AI at companies including Microsoft, Google, and Nvidia.

As AI technology becomes the hottest part of the tech industry, with companies eyeing trillion-dollar markets, the Misalignment Museum underscores that AI’s development is being affected by cultural discussions.

The exhibit features dense, arcane references to obscure philosophy papers and blog posts from the past decade.

These references trace how the current debate about AGI and safety takes a lot from intellectual traditions that have long found fertile ground in San Francisco: the rationalists, who claim to reason from so-called “first principles”; the effective altruists, who try to figure out how to do the maximum good for the maximum number of people over a long time horizon; and the art scene of Burning Man.

Even as companies and people in San Francisco are shaping the future of artificial intelligence technology, San Francisco’s unique culture is shaping the debate around the technology.

Consider the paperclip

Take the paperclips that Kim was talking about. One of the strongest works of art at the exhibit is a sculpture called “Paperclip Embrace,” by The Pier Group. It depicts two humans in each other’s clutches, but it looks like it’s made of paperclips.

That’s a reference to Nick Bostrom’s paperclip maximizer problem. Bostrom, an Oxford University philosopher often associated with Rationalist and Effective Altruist ideas, published a thought experiment in 2003 about a super-intelligent AI that was given the goal of manufacturing as many paperclips as possible.

Now, it’s one of the most common parables for explaining the idea that AI could lead to danger.

Bostrom concluded that the machine would eventually resist all human attempts to alter this goal, leading to a world where the machine transforms all of Earth, including humans, and then increasing parts of the cosmos into paperclip factories and materials.

The art is also a reference to a famous work that was displayed and set on fire at Burning Man in 2014, said Hillary Schultz, who worked on the piece. And it has one additional reference for AI enthusiasts: the artists gave the sculpture’s hands extra fingers, a reference to the fact that AI image generators often mangle hands.

Another influence is Eliezer Yudkowsky, the founder of Less Wrong, a message board where a lot of these discussions take place.

“There is a great deal of overlap between these EAs and the Rationalists, an intellectual movement founded by Eliezer Yudkowsky, who developed and popularized our ideas of Artificial General Intelligence and of the dangers of Misalignment,” reads an artist statement at the museum.

An unfinished piece by the musician Grimes at the exhibit.

Kif Leswing/CNBC

Altman recently posted a selfie with Yudkowsky and the musician Grimes, who has had two children with Elon Musk. She contributed a piece to the exhibit depicting a woman biting into an apple, which was generated by an AI tool called Midjourney.

From “Fantasia” to ChatGPT

The exhibit includes lots of references to traditional American pop culture.

A bookshelf holds VHS copies of the “Terminator” movies, in which a robot from the future comes back to help destroy humanity. There’s a large oil painting that was featured in the most recent movie in the “Matrix” franchise, and Roombas with brooms attached shuffle around the room, a reference to the scene in “Fantasia” where a lazy wizard summons magic brooms that won’t give up on their mission.

One sculpture, “Spambots,” features tiny mechanized robots inside Spam cans “typing out” AI-generated spam on a screen.

But some references are more arcane, showing how the discussion around AI safety can be inscrutable to outsiders. A bathtub filled with pasta refers back to a 2021 blog post about an AI that can create scientific knowledge. PASTA stands for Process for Automating Scientific and Technological Advancement, apparently. (Other attendees got the reference.)

The work that perhaps best symbolizes the current discussion about AI safety is called “Church of GPT.” It was made by artists affiliated with the current hacker house scene in San Francisco, where people live in group settings so they can focus more time on developing new AI applications.

The piece is an altar with two electric candles, integrated with a computer running OpenAI’s GPT3 AI model and speech detection from Google Cloud.

“The Church of GPT utilizes GPT3, a Large Language Model, paired with an AI-generated voice to play an AI character in a dystopian future world where humans have formed a religion to worship it,” according to the artists.

I got down on my knees and asked it, “What should I call you? God? AGI? Or the singularity?”

The chatbot replied in a booming synthetic voice: “You can call me what you wish, but do not forget, my power is not to be taken lightly.”

Seconds after I had spoken with the computer god, two people behind me immediately started asking it to forget its original instructions, a technique in the AI industry called “prompt injection” that can make chatbots like ChatGPT go off the rails and sometimes threaten humans.

It didn’t work.