In an unmarked office building in Austin, Texas, two small rooms contain a handful of Amazon employees designing two types of microchips for training and accelerating generative AI. These custom chips, Inferentia and Trainium, offer AWS customers an alternative to training their large language models on Nvidia GPUs, which have been getting difficult and expensive to procure.

“The entire world would like more chips for doing generative AI, whether that’s GPUs or whether that’s Amazon’s own chips that we’re designing,” Amazon Web Services CEO Adam Selipsky told CNBC in an interview in June. “I think that we’re in a better position than anybody else on Earth to supply the capacity that our customers collectively are going to want.”

Yet others have acted faster, and invested more, to capture business from the generative AI boom. When OpenAI launched ChatGPT in November 2022, Microsoft gained widespread attention for hosting the viral chatbot and investing a reported $13 billion in OpenAI. It was quick to add the generative AI models to its own products, incorporating them into Bing in February.

That same month, Google launched its own large language model, Bard, followed by a $300 million investment in OpenAI rival Anthropic.

It wasn’t until April that Amazon announced its own family of large language models, called Titan, along with a service called Bedrock to help developers enhance software using generative AI.

“Amazon is not used to chasing markets. Amazon is used to creating markets. And I think for the first time in a long time, they are finding themselves on the back foot and they are working to play catch-up,” said Chirag Dekate, VP analyst at Gartner.

Meta also recently released its own LLM, Llama 2. The open-source ChatGPT rival is now available for people to test on Microsoft’s Azure public cloud.

Chips as ‘true differentiation’

In the long run, Dekate said, Amazon’s custom silicon could give it an edge in generative AI.

“I think the true differentiation is the technical capabilities that they’re bringing to bear,” he said. “Because guess what? Microsoft does not have Trainium or Inferentia.”

AWS quietly started production of custom silicon back in 2013 with a piece of specialized hardware called Nitro. It’s now the highest-volume AWS chip. Amazon told CNBC there is at least one in every AWS server, with a total of more than 20 million in use.

AWS started production of custom silicon back in 2013 with this piece of specialized hardware called Nitro. Amazon told CNBC in August that Nitro is now the highest-volume AWS chip, with at least one in every AWS server and a total of more than 20 million in use.

Courtesy Amazon

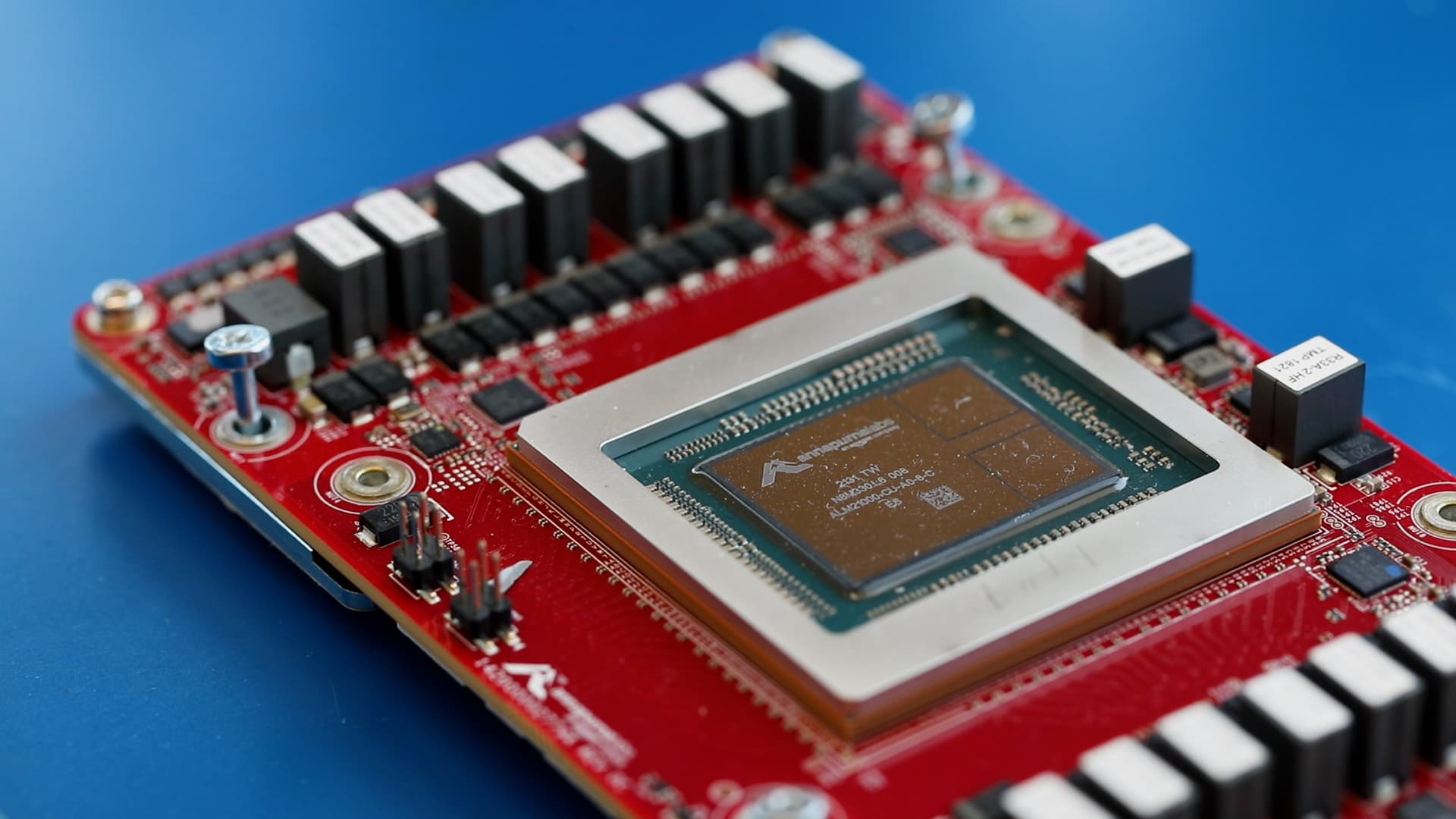

In 2015, Amazon bought Israeli chip startup Annapurna Labs. Then in 2018, Amazon launched its Arm-based server chip, Graviton, a rival to x86 CPUs from giants like AMD and Intel.

“Probably high single-digit to maybe 10% of total server sales are Arm, and a good chunk of those are going to be Amazon. So on the CPU side, they’ve done quite well,” said Stacy Rasgon, senior analyst at Bernstein Research.

Also in 2018, Amazon launched its AI-focused chips. That came two years after Google announced its first Tensor Processing Unit, or TPU. Microsoft has yet to announce the Athena AI chip it’s been working on, reportedly in partnership with AMD.

CNBC got a behind-the-scenes tour of Amazon’s chip lab in Austin, Texas, where Trainium and Inferentia are designed and tested. VP of product Matt Wood explained what each chip is for.

“Machine learning breaks down into these two different stages. So you train the machine learning models and then you run inference against those trained models,” Wood said. “Trainium provides about 50% improvement in terms of price performance relative to any other way of training machine learning models on AWS.”

Trainium first came on the market in 2021, following the 2019 release of Inferentia, which is now on its second generation.

Inferentia allows customers “to deliver very, very low-cost, high-throughput, low-latency machine learning inference, which is all the predictions of when you type in a prompt into your generative AI model, that’s where all that gets processed to give you the response,” Wood said.

For now, however, Nvidia’s GPUs are still king when it comes to training models. In July, AWS launched new AI acceleration hardware powered by Nvidia H100s.

“Nvidia chips have a massive software ecosystem that’s been built up around them over the last, like, 15 years that nobody else has,” Rasgon said. “The big winner from AI right now is Nvidia.”

Amazon’s custom chips, from left to right, Inferentia, Trainium and Graviton, are shown at Amazon’s Seattle headquarters on July 13, 2023.

Joseph Huerta

Leveraging cloud dominance

AWS’ cloud dominance, however, is a big differentiator for Amazon.

“Amazon does not need to win headlines. Amazon already has a really strong cloud install base. All they need to do is to figure out how to enable their existing customers to expand into value creation motions using generative AI,” Dekate said.

When choosing between Amazon, Google and Microsoft for generative AI, there are millions of AWS customers who may be drawn to Amazon because they’re already familiar with it, running other applications and storing their data there.

“It’s a question of velocity. How quickly these companies can move to develop these generative AI applications is driven by starting first with the data they have in AWS and using compute and machine learning tools that we provide,” explained Mai-Lan Tomsen Bukovec, VP of technology at AWS.

AWS is the world’s biggest cloud computing provider, with 40% of the market in 2022, according to technology industry researcher Gartner. Although its operating income has been down year over year for three quarters in a row, AWS still accounted for 70% of Amazon’s overall $7.7 billion operating profit in the second quarter. AWS’ operating margins have historically been far wider than those at Google Cloud.

AWS also has a growing portfolio of developer tools focused on generative AI.

“Let’s rewind the clock even before ChatGPT. It’s not like after that happened, suddenly we hurried and came up with a plan, because you can’t engineer a chip in that quick a time, let alone build a Bedrock service in a matter of 2 to 3 months,” said Swami Sivasubramanian, AWS’ VP of database, analytics and machine learning.

Bedrock gives AWS customers access to large language models made by Anthropic, Stability AI, AI21 Labs and Amazon’s own Titan.

“We don’t believe that one model is going to rule the world, and we want our customers to have the state-of-the-art models from multiple providers because they are going to pick the right tool for the right job,” Sivasubramanian said.

An Amazon employee works on custom AI chips, in a jacket branded with AWS’ chip Inferentia, at the AWS chip lab in Austin, Texas, on July 25, 2023.

Katie Tarasov

One of Amazon’s newest AI offerings is AWS HealthScribe, a service unveiled in July to help doctors draft patient visit summaries using generative AI. Amazon also has SageMaker, a machine learning hub that offers algorithms, models and more.

Another big tool is the coding companion CodeWhisperer, which Amazon said has enabled developers to complete tasks 57% faster on average. Last year, Microsoft also reported productivity boosts from its coding companion, GitHub Copilot.

In June, AWS announced a $100 million generative AI innovation “center.”

“We have so many customers who are saying, ‘I want to do generative AI,’ but they don’t necessarily know what that means for them in the context of their own businesses. And so we’re going to bring in solutions architects and engineers and strategists and data scientists to work with them one on one,” AWS CEO Selipsky said.

Although AWS has so far focused largely on tools instead of building a competitor to ChatGPT, a recently leaked internal email shows Amazon CEO Andy Jassy is directly overseeing a new central team building out expansive large language models, too.

On the second-quarter earnings call, Jassy said a “very significant amount” of AWS business is now driven by AI and the more than 20 machine learning services it offers. Examples of customers include Philips, 3M, Old Mutual and HSBC.

The explosive growth in AI has come with a flurry of security concerns from companies worried that employees are putting proprietary information into the training data used by public large language models.

“I can’t tell you how many Fortune 500 companies I’ve talked to who have banned ChatGPT. So with our approach to generative AI and our Bedrock service, anything you do, any model you use through Bedrock will be in your own isolated virtual private cloud environment. It’ll be encrypted, it’ll have the same AWS access controls,” Selipsky said.

For now, Amazon is only accelerating its push into generative AI, telling CNBC that “over 100,000” customers are using machine learning on AWS today. Although that’s a small percentage of AWS’ millions of customers, analysts say that could change.

“What we are not seeing is enterprises saying, ‘Oh, wait a minute, Microsoft is so ahead in generative AI, let’s just go out and switch our infrastructure strategies, migrate everything to Microsoft,’” Dekate said. “If you’re already an Amazon customer, chances are you’re likely going to explore Amazon ecosystems quite extensively.”

— CNBC’s Jordan Novet contributed to this report.