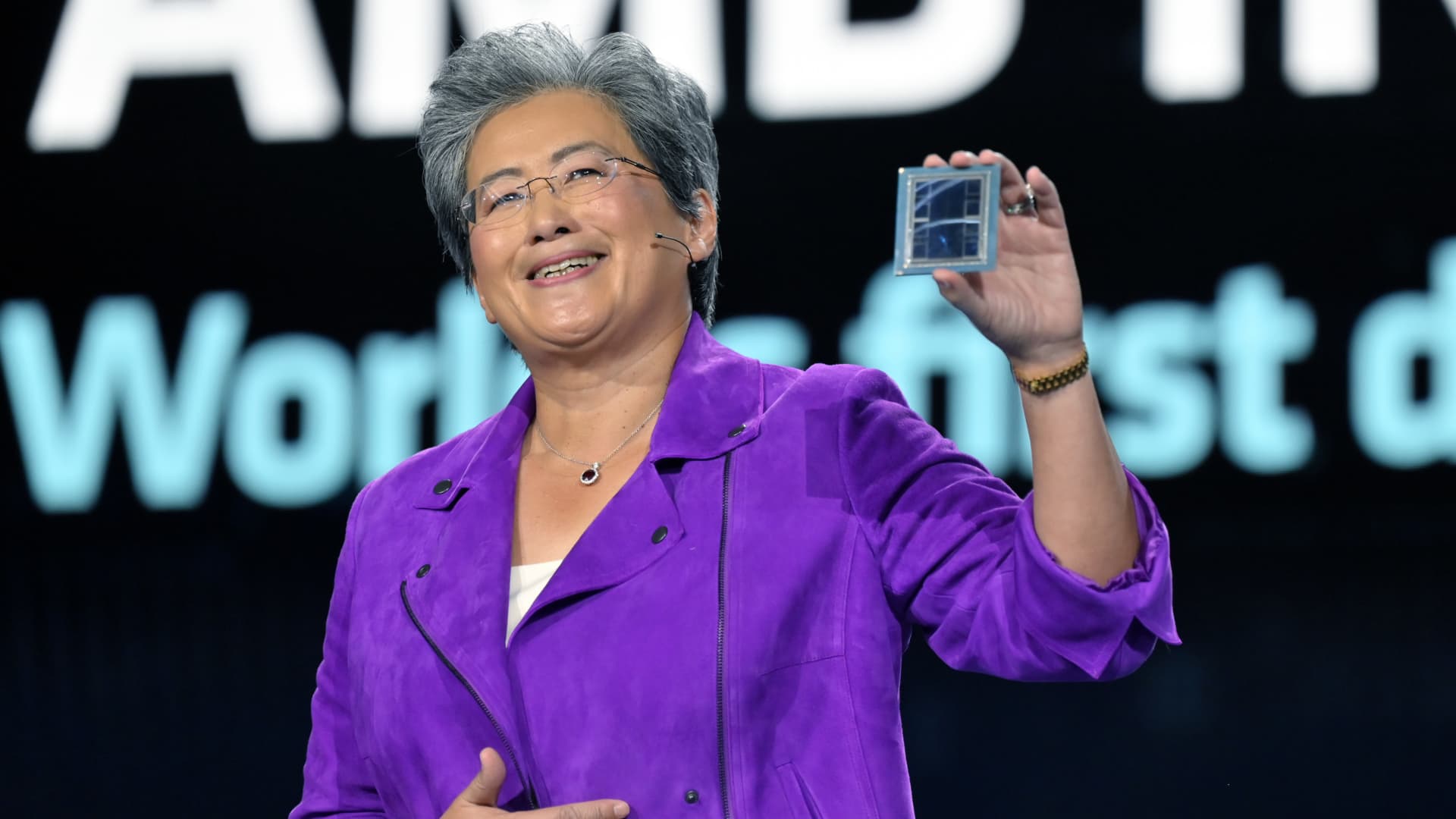

Lisa Su displays an AMD Instinct MI300 chip as she delivers a keynote address at CES 2023 at The Venetian Las Vegas on January 04, 2023 in Las Vegas, Nevada.

David Becker | Getty Images

AMD said on Tuesday that its most advanced GPU for artificial intelligence, the MI300X, will start shipping to some customers later this year.

AMD’s announcement represents the strongest challenge to Nvidia, which currently dominates the market for AI chips with more than 80% market share, according to analysts.

GPUs are chips used by companies like OpenAI to build cutting-edge AI programs such as ChatGPT.

If AMD’s AI chips, which it calls “accelerators,” are embraced by developers and server makers as substitutes for Nvidia’s products, it could represent a big untapped market for the chipmaker, which is best known for its traditional computer processors.

AMD CEO Lisa Su told investors and analysts in San Francisco on Tuesday that AI is the company’s “biggest and most strategic long-term growth opportunity.”

“We think about the data center AI accelerator [market] growing from something like $30 billion this year, at over 50% compound annual growth rate, to over $150 billion in 2027,” Su said.
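The figures in Su's projection can be checked with a short compound-growth calculation. The sketch below assumes four compounding years (2023 to 2027) and a growth rate of exactly 50%; the function name `project_market` is illustrative and does not come from AMD.

```python
def project_market(start_billion: float, annual_growth: float, years: int) -> float:
    """Compound a starting market size forward by a fixed annual growth rate."""
    return start_billion * (1 + annual_growth) ** years


# Assumption: "this year" is 2023, so there are four compounding periods to 2027.
projected_2027 = project_market(start_billion=30.0, annual_growth=0.50, years=2027 - 2023)

# 30 * 1.5**4 = 151.875, consistent with "over $150 billion"
print(f"Projected 2027 market: ${projected_2027:.1f} billion")
```

Under those assumptions, a 50% annual rate is just enough to clear the $150 billion mark, which matches the "over 50%" wording in the quote.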

While AMD did not disclose a price, the move could put price pressure on Nvidia’s GPUs, such as the H100, which can cost $30,000 or more. Lower GPU prices could help drive down the high cost of serving generative AI applications.

AI chips are one of the bright spots in the semiconductor industry, while PC sales, a traditional driver of semiconductor processor sales, slump.

Last month, AMD CEO Lisa Su said on an earnings call that while the MI300X will be available for sampling this fall, it would start shipping in larger volumes next year. Su shared more details on the chip during her presentation on Tuesday.

“I love this chip,” Su said.

The MI300X

AMD said that its new MI300X chip and its CDNA architecture were designed for large language models and other cutting-edge AI models.

“At the heart of this are GPUs. GPUs are enabling generative AI,” Su said.

The MI300X can use up to 192GB of memory, which means it can fit even larger AI models than other chips. Nvidia’s rival H100 only supports 120GB of memory, for example.

Large language models for generative AI applications use lots of memory because they run an increasing number of calculations. AMD demoed the MI300X running a 40 billion parameter model called Falcon. OpenAI’s GPT-3 model has 175 billion parameters.

“Model sizes are getting much larger, and you actually need multiple GPUs to run the latest large language models,” Su said, noting that with the added memory on AMD chips, developers would not need as many GPUs.
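A rough back-of-the-envelope estimate shows why memory capacity affects how many GPUs a model needs. The sketch below is illustrative only: it assumes weights are stored at 16 bits (2 bytes) per parameter and counts model weights alone, ignoring activations, caches and other runtime overhead. Neither assumption comes from AMD, and the helper names are hypothetical. The memory capacities and parameter counts are the ones quoted in this article.

```python
import math

# Assumption (not from AMD): 16-bit weights, i.e. 2 bytes per parameter.
BYTES_PER_PARAM = 2


def weights_gb(params_billion: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Approximate weight footprint in GB (1 GB = 1e9 bytes).

    Billions of parameters times bytes per parameter gives GB directly.
    """
    return params_billion * bytes_per_param


def min_gpus(params_billion: float, gpu_memory_gb: float) -> int:
    """Smallest number of GPUs whose combined memory holds the weights alone."""
    return math.ceil(weights_gb(params_billion) / gpu_memory_gb)


# Figures quoted in the article: 40B (Falcon), 175B (GPT-3), 192GB and 120GB per chip.
for name, params in [("Falcon 40B", 40), ("GPT-3 175B", 175)]:
    print(
        f"{name}: ~{weights_gb(params):.0f} GB of weights, "
        f"{min_gpus(params, 192)} GPU(s) at 192GB, "
        f"{min_gpus(params, 120)} GPU(s) at 120GB"
    )
```

Under these assumptions, a 40 billion parameter model needs about 80GB for its weights and fits on a single chip, while a 175 billion parameter model needs about 350GB and would span two 192GB chips or three 120GB chips. Real deployments need additional headroom beyond the weights.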

AMD also said it would offer an Infinity Architecture that combines eight of its MI300X accelerators in one system. Nvidia and Google have developed similar systems that combine eight or more GPUs in a single box for AI applications.

One reason AI developers have historically preferred Nvidia chips is that the company has a well-developed software package called CUDA that enables them to access the chip’s core hardware features.

AMD said on Tuesday that it has its own software for its AI chips, which it calls ROCm.

“Now while this is a journey, we have made really great progress in building a strong software stack that works with the open ecosystem of models, libraries, frameworks and tools,” AMD president Victor Peng said.