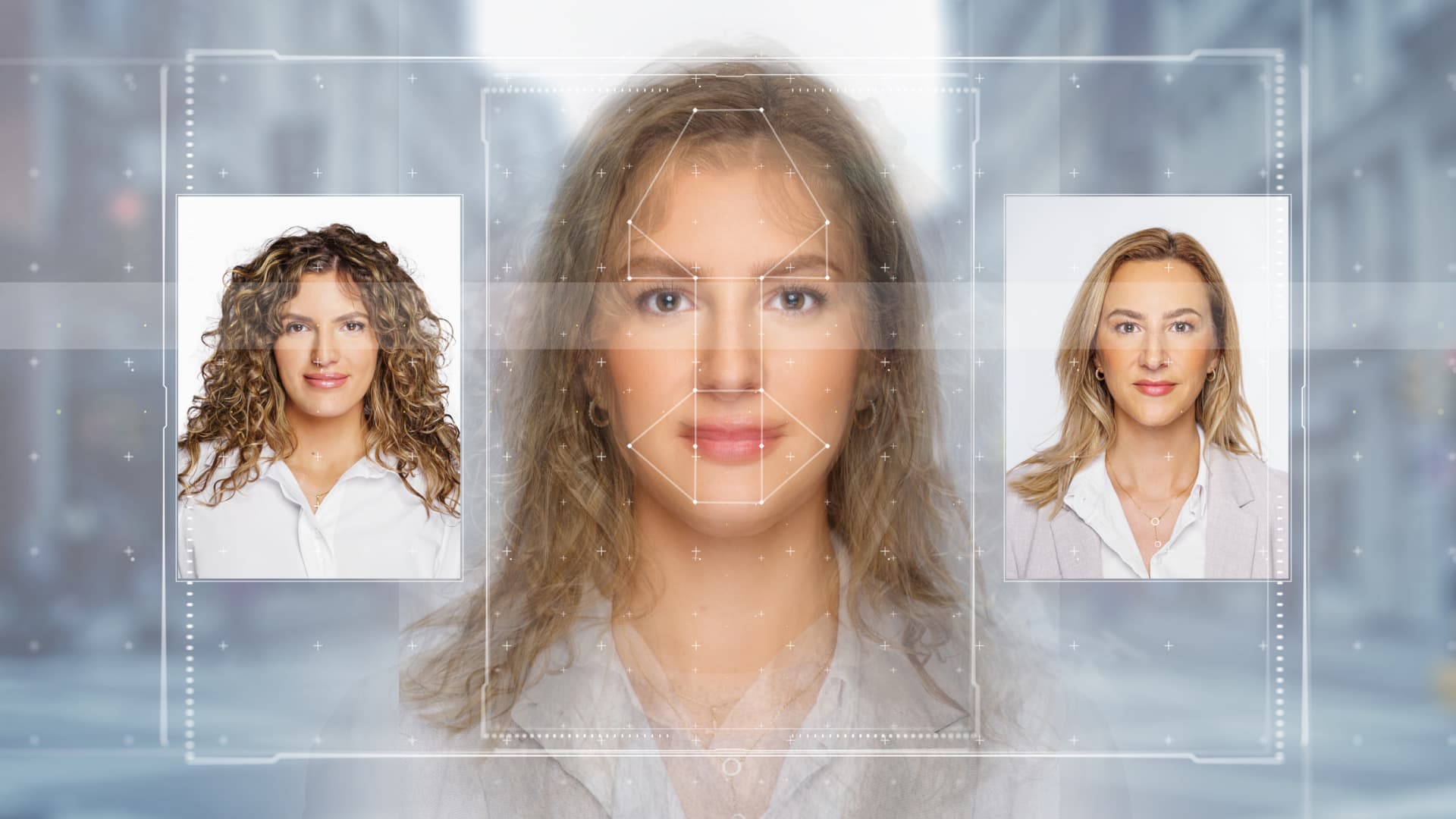

2024 is set up to be the biggest global election year in history. It coincides with the rapid rise of deepfakes. In the Asia-Pacific (APAC) region alone, deepfakes surged by 1,530% from 2022 to 2023, according to a Sumsub report.

Fotografielink | Istock | Getty Images

Cybersecurity experts fear that content generated by artificial intelligence has the potential to distort our perception of reality, a concern that is all the more troubling in a year filled with critical elections.

But one top expert is going against the grain, suggesting instead that the threat deepfakes pose to democracy may be “overblown.”

Martin Lee, technical lead for Cisco’s Talos security intelligence and research group, told CNBC he thinks that deepfakes, though a powerful technology in their own right, are not as impactful as fake news.

However, new generative AI tools do “threaten to make the generation of fake content easier,” he added.

AI-generated material can often contain readily identifiable signs that it was not produced by a real person.

Visual content, in particular, has proven prone to flaws. For example, AI-generated images can contain visual anomalies, such as a person with more than two hands, or a limb that merges into the background of the image.

It can be harder to distinguish between synthetically generated voice audio and voice clips of real people. But AI is still only as good as its training data, experts say.

“Nevertheless, machine-generated content can often be detected as such when viewed objectively. In any case, it is unlikely that the generation of content is limiting attackers,” Lee said.

Experts have previously told CNBC that they expect AI-generated disinformation to be a key risk in upcoming elections around the world.

‘Limited usefulness’

Matt Calkins, CEO of enterprise tech firm Appian, which helps businesses build apps more easily with software tools, said AI has “limited usefulness.”

Many of today’s generative AI tools can be “boring,” he added. “Once it knows you, it can go from amazing to useful [but] it just can’t get across that line right now.”

“Once we’re willing to trust AI with knowledge of ourselves, it’s going to be truly incredible,” Calkins told CNBC in an interview this week.

That could make it a more effective, and more dangerous, disinformation tool in the future, Calkins warned, adding that he is unhappy with the progress being made on efforts to regulate the technology in the U.S.

It might take AI producing something egregiously “offensive” for U.S. lawmakers to act, he added. “Give us a year. Wait until AI offends us. And then maybe we’ll make the right decision,” Calkins said. “Democracies are reactive institutions,” he explained.

No matter how advanced AI gets, however, Cisco’s Lee says there are some tried and tested ways to spot misinformation, whether it has been made by a machine or a human.

“People need to know that these attacks are happening and be aware of the techniques that may be used. When encountering content that triggers our emotions, we should stop, pause, and ask ourselves if the information itself is even plausible,” Lee suggested.

“Has it been published by a reputable media source? Are other reputable media sources reporting the same thing?” he said. “If not, it’s probably a scam or disinformation campaign that should be ignored or reported.”