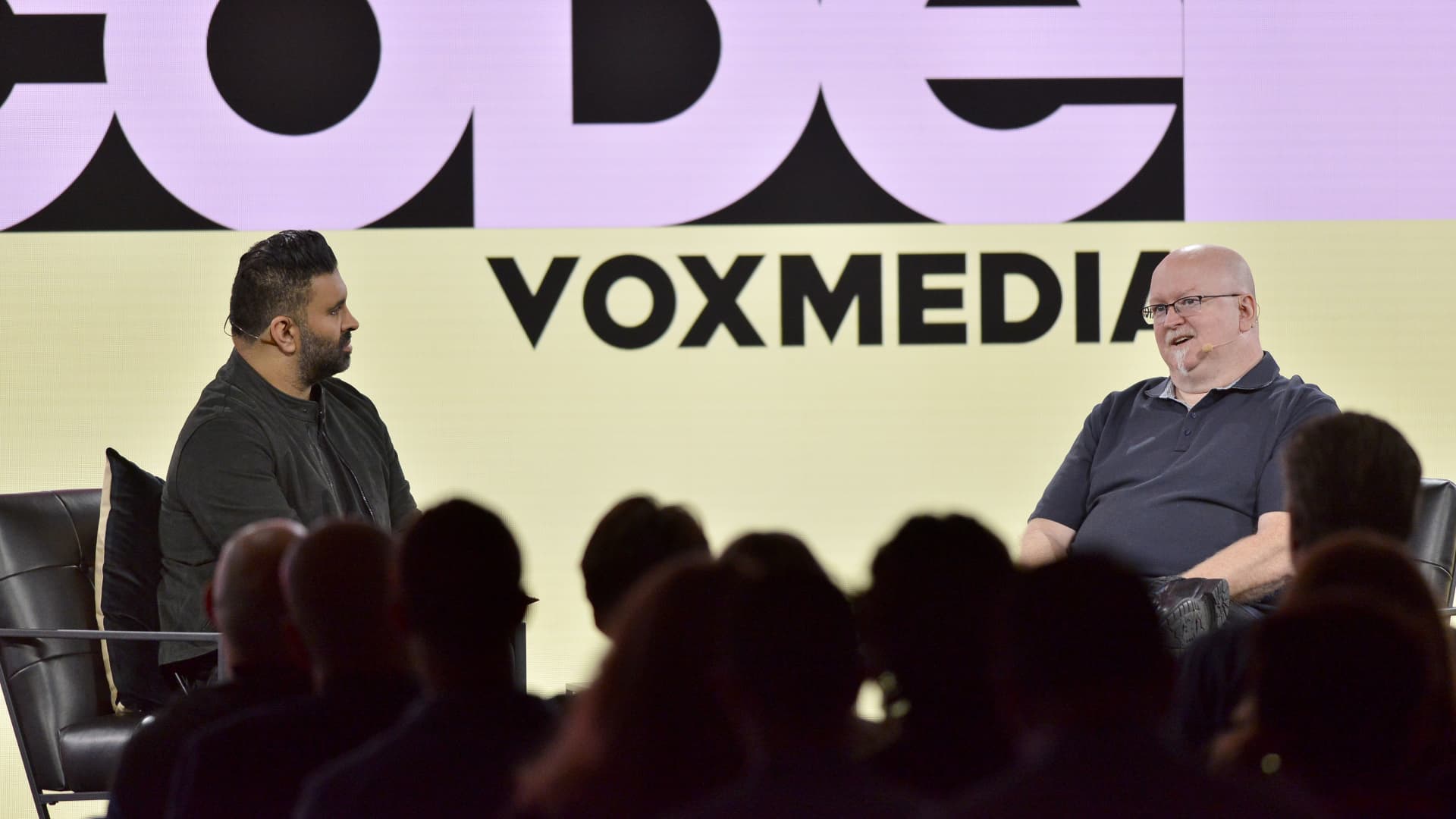

Nilay Patel, left, editor-in-chief of the Verge, and Kevin Scott, Microsoft’s chief technology officer, speak at Vox Media’s Code Conference at The Ritz-Carlton Laguna Niguel in Dana Point, California, on Sept. 27, 2023.

Jerod Harris | Vox Media | Getty Images

Microsoft technology chief Kevin Scott said on Wednesday that the company is having an easier time getting access to Nvidia’s chips that run artificial intelligence workloads than it did a few months ago.

Speaking onstage at the Code Conference in Dana Point, California, Scott said the market for Nvidia’s graphics processing units (GPUs) is opening up a little. The GPUs have been in heavy demand since Microsoft-backed OpenAI launched the ChatGPT chatbot late last year.

“Demand was far exceeding the supply of GPU capacity that the whole ecosystem could produce,” Scott told the Verge’s Nilay Patel. “That is resolving. It’s still tight, but it’s getting better every week, and we’ve got more good news ahead of us than bad on that front, which is great.”

Microsoft, like Google and other tech companies, has been rapidly adding generative AI to its own products and selling the technology’s capabilities to clients. Training and deploying the underlying AI models has relied mainly on Nvidia’s GPUs, creating a shortage of supply.

Nvidia said last month that it expects revenue growth this quarter of 170% from a year earlier. The company has such control of the AI chip market that its gross margin shot up from 44% to 70% in a year. Nvidia’s stock price is up 190% in 2023, far outpacing every other member of the S&P 500.

In an interview with Patel that was published in May, Scott said that one of his responsibilities is managing the GPU budget across Microsoft. He called it “a terrible job” that’s been “miserable for five years now.”

“It’s easier now than when we talked last time,” Scott said on Wednesday. At that time, generative AI technologies were still new and attracting broad attention from the public, he said.

The increased supply “makes my job of adjudicating these very gnarly conflicts less terrible,” he said.

Nvidia expects to increase supply each quarter through next year, finance chief Colette Kress told analysts on last month’s earnings call.

Web traffic to ChatGPT has declined month over month for three consecutive months, Similarweb said in a blog post. Microsoft provides Azure cloud-computing services to OpenAI. Meanwhile, Microsoft is planning to start selling access to its Microsoft 365 Copilot to large organizations with subscriptions to its productivity software in November.

Scott declined to address the accuracy of media reports regarding Microsoft’s development of a custom AI chip, but he did highlight the company’s in-house silicon work. Microsoft has previously worked with Qualcomm on an Arm-based chip for Surface PCs.

“I’m not confirming anything, but I will say that we’ve got a pretty substantial silicon investment that we’ve had for years,” Scott said. “And the thing that we will do is we’ll make sure that we’re making the best choices for how we build these systems, using whatever options we have available. And, like, the best option that’s been available during the last handful of years has been Nvidia.”
